Here Is a Fast Cure for DeepSeek AI


Author: Margret Burrell · Date: 25-02-23 02:55

With DeepSeek claiming performance that matches AI tools like ChatGPT, it’s tempting to give it a try. On its own, it can produce generic outputs. While it may perform similarly to models like GPT-4 on certain benchmarks, DeepSeek distinguishes itself with lower prices, an open-source approach, and greater flexibility for developers. DeepSeek is more cost-efficient thanks to its considerably lower cost per token, which lets businesses and developers scale without high expenses. For startups and smaller companies that want to use AI but don’t have large budgets for it, DeepSeek R1 is a good choice. If you’re new to ChatGPT, check our article on how to use ChatGPT to learn more about the AI tool. ChatGPT is an AI language model created by OpenAI, a research organization, to generate human-like text and understand context. ChatGPT evolves through continuous updates from OpenAI, focusing on improving performance, integrating user feedback, and expanding real-world use cases.
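
To see why a lower cost per token matters as usage grows, here is a minimal sketch of the arithmetic. The two prices below are hypothetical placeholders, not published rates for DeepSeek, ChatGPT, or any other service, and the model names are illustrative labels only.

```python
# Hypothetical prices in USD per million tokens: placeholders, NOT published rates.
HYPOTHETICAL_PRICES = {
    "lower_cost_model": 2.00,
    "higher_cost_model": 10.00,
}


def monthly_cost(tokens_per_month: int, price_per_million: float) -> float:
    """Cost in USD of a month's token volume at a flat per-million-token price."""
    return tokens_per_month / 1_000_000 * price_per_million


if __name__ == "__main__":
    # Cost scales linearly with volume, so the absolute gap between
    # the two hypothetical prices widens as usage grows.
    for volume in (10_000_000, 500_000_000):
        for name, price in HYPOTHETICAL_PRICES.items():
            print(f"{volume:>12,} tokens | {name}: ${monthly_cost(volume, price):,.2f}")
```

At 500 million tokens a month, the hypothetical prices above work out to $1,000 versus $5,000. Real API pricing usually differs for input and output tokens, so treat the flat price here as a simplification.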

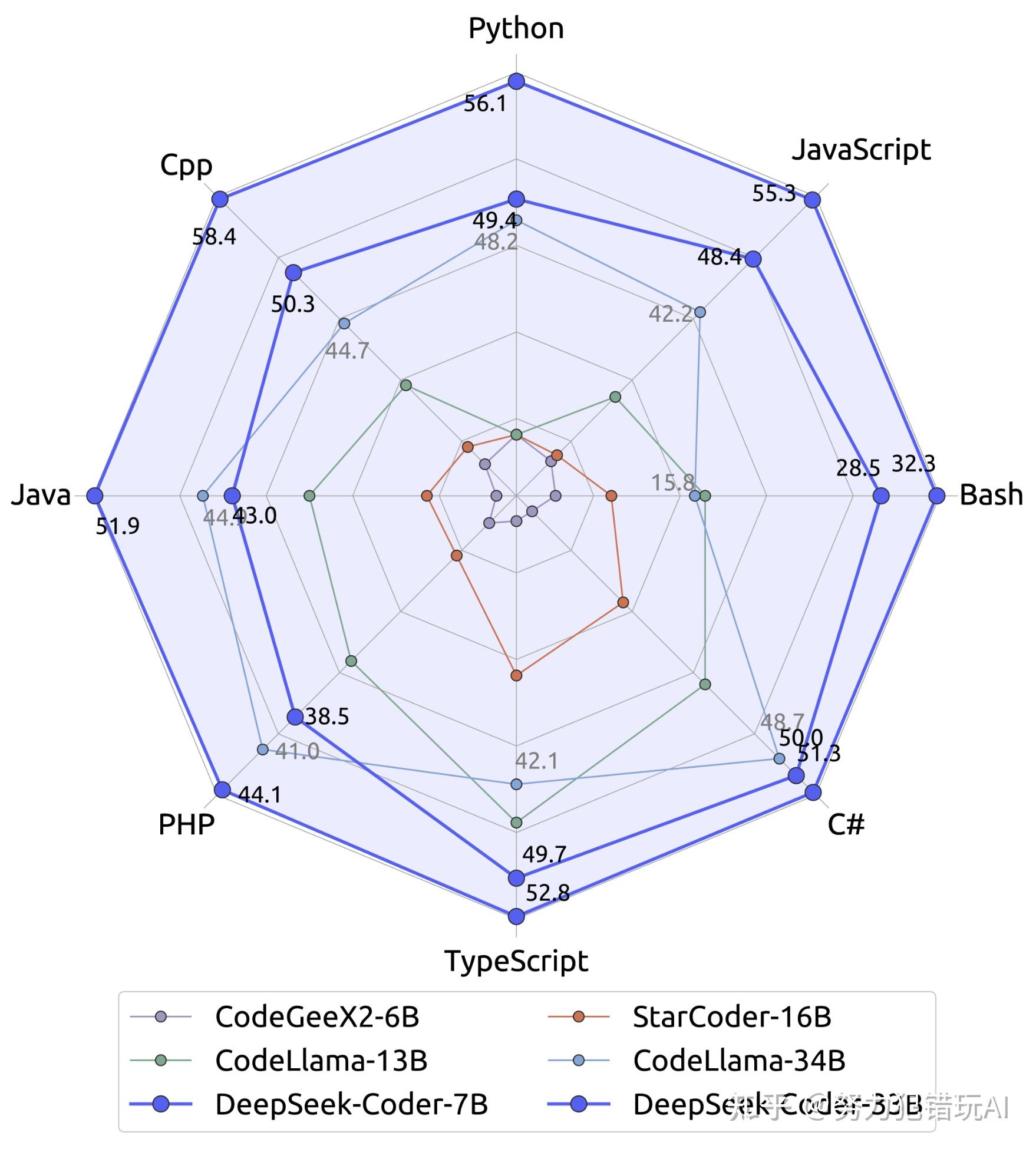

However, despite its impressive capabilities, ChatGPT has limitations. What’s remarkable, though, is that we’re comparing one of DeepSeek’s earliest models to one of ChatGPT’s advanced models, and this applies to almost all the parameters we compare here. The company’s current LLMs are DeepSeek-V3 and DeepSeek-R1. One of the main features that distinguishes the DeepSeek LLM family from other LLMs is the superior performance of its 67B Base model, which outperforms the Llama2 70B Base model in several domains, such as reasoning, coding, mathematics, and Chinese comprehension. From datasets and vector databases to LLM Playgrounds for model comparison and associated notebooks. The model uses a self-attention mechanism to process and generate text, allowing it to capture complex relationships within input data. DeepSeek R1’s Mixture-of-Experts (MoE) architecture is one of the more advanced approaches to solving problems with AI: instead of running the whole network, it activates only a small subset of "expert" sub-networks for each token. This selective activation comes from the MoE design, while DeepSeek R1’s Multi-Head Latent Attention (MLA) mechanism complements it by compressing the attention key-value cache to reduce memory use during inference. In various benchmark tests, DeepSeek R1’s performance matched or came close to ChatGPT o1. As DeepSeek R1 continues to gain traction, it stands as a formidable contender in the AI landscape, challenging established players like ChatGPT and driving further advances in conversational AI technology.
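
The selective activation described above can be illustrated with a toy top-k router. This is a minimal sketch under simplifying assumptions, not DeepSeek’s implementation: the function names, the dot-product gate, and the four scaling "experts" are all hypothetical, and real MoE layers use learned gates inside every transformer layer, many more experts, and load balancing. What it does show is the core idea: each token is scored against every expert, only the top_k experts actually run, and their outputs are mixed by softmax weights.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]


def softmax(xs: Sequence[float]) -> List[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: Vector,
    gate_weights: Sequence[Vector],
    experts: Sequence[Expert],
    top_k: int = 2,
) -> Tuple[Vector, List[int]]:
    """Route one token vector `x` to its top_k experts and mix their outputs."""
    # Router: one score per expert (dot product of x with that expert's gate vector).
    scores = [sum(g_i * x_i for g_i, x_i in zip(g, x)) for g in gate_weights]
    # Select the top_k experts; all others are skipped entirely for this token.
    chosen = sorted(range(len(experts)), key=lambda i: scores[i], reverse=True)[:top_k]
    # Turn the chosen experts' scores into mixing weights that sum to 1.
    mix = softmax([scores[i] for i in chosen])
    out = [0.0] * len(x)
    for w, i in zip(mix, chosen):
        y = experts[i](x)  # only the selected experts do any computation
        out = [o + w * y_j for o, y_j in zip(out, y)]
    return out, chosen


if __name__ == "__main__":
    # Four toy "experts", each just scaling its input by a different factor.
    experts = [lambda v, s=s: [s * v_j for v_j in v] for s in (1.0, 2.0, 3.0, 4.0)]
    gates = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [-1.0, 0.0]]
    out, chosen = moe_forward([1.0, 2.0], gates, experts, top_k=2)
    print("experts used:", chosen)  # experts used: [1, 2]
    print("output:", out)
```

Here the router scores are 1.0, 2.0, 1.5, and -1.0, so only experts 1 and 2 run; experts 0 and 3 cost nothing for this token, which is the source of the savings in an MoE model.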

While both models perform well on tasks like coding, writing, and problem-solving, DeepSeek stands out with its free access and significantly lower API prices. Enhanced Writing and Instruction Following: DeepSeek-V2.5 offers improvements in writing, generating more natural-sounding text and following complex instructions more effectively than earlier versions. This approach lets DeepSeek R1 handle complex tasks with remarkable efficiency, reportedly processing information up to twice as fast as conventional models on tasks like coding and mathematical computations. DeepSeek: less integrated into mainstream applications, but highly effective for specialized tasks. Unlike ChatGPT, which has expensive APIs and usage limits, DeepSeek offers free access to its core functionality and lower pricing for larger applications. Treasury’s IT team has also strengthened its firewall to block access to both the DeepSeek app and website, in line with a broader cybersecurity strategy that actively monitors threats to state financial systems. AI models vary in how much access they allow, ranging from fully closed, paywalled systems to open-weight to fully open-source releases.

By making these assumptions explicit, this framework helps create AI systems that are fairer and more reliable. ChatGPT enjoys wider accessibility through various APIs and interfaces, making it a popular choice for many applications. It can be integrated into applications for customer service, virtual assistants, and content creation. Janus-Pro is 7 billion parameters in size, with improved training speed and accuracy in text-to-image generation and task comprehension, DeepSeek’s technical report said. With a staggering 671 billion total parameters, DeepSeek R1 activates only about 37 billion of them for each token, which is like calling in just the right specialists for the job at hand. With 175 billion parameters (the figure usually cited for ChatGPT, which is GPT-3’s published size), ChatGPT’s architecture ensures that all of its "knowledge" is available for every task. In other words, it uses all 175 billion parameters every single time, whether or not they’re needed. That also means it consumes significant amounts of computational power and energy, which is not only costly but also unsustainable.
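
The parameter figures above translate into a rough per-token comparison. This back-of-the-envelope sketch takes the 671B/37B and 175B numbers from the article and assumes the common rule of thumb that a forward pass costs about 2 FLOPs per active parameter per token; it ignores attention over the context, routing overhead, and hardware effects, so treat the ratio as an order-of-magnitude estimate only. The function names are illustrative.

```python
"""Back-of-the-envelope comparison of active parameters per token."""

MOE_TOTAL_PARAMS = 671e9   # DeepSeek R1 total parameters (from the article)
MOE_ACTIVE_PARAMS = 37e9   # parameters activated per token (from the article)
DENSE_PARAMS = 175e9       # dense model figure cited in the article; all active


def active_fraction(active: float, total: float) -> float:
    """Share of a model's parameters that take part in one token's forward pass."""
    return active / total


def approx_forward_flops(active: float) -> float:
    """Rule-of-thumb forward-pass cost: about 2 FLOPs per active parameter per token.

    Ignores attention over the context, routing overhead, and hardware effects.
    """
    return 2 * active


if __name__ == "__main__":
    print(f"MoE active share: {active_fraction(MOE_ACTIVE_PARAMS, MOE_TOTAL_PARAMS):.1%}")
    print(f"Dense active share: {active_fraction(DENSE_PARAMS, DENSE_PARAMS):.0%}")
    ratio = approx_forward_flops(DENSE_PARAMS) / approx_forward_flops(MOE_ACTIVE_PARAMS)
    print(f"Dense/MoE per-token compute ratio: ~{ratio:.1f}x")
```

Under these assumptions, DeepSeek R1 uses about 5.5% of its parameters per token, and a dense 175B model does roughly 4.7 times as much work per token, even though the MoE model is larger in total.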
